Streaming Detection of Queried Event Start

Abstract

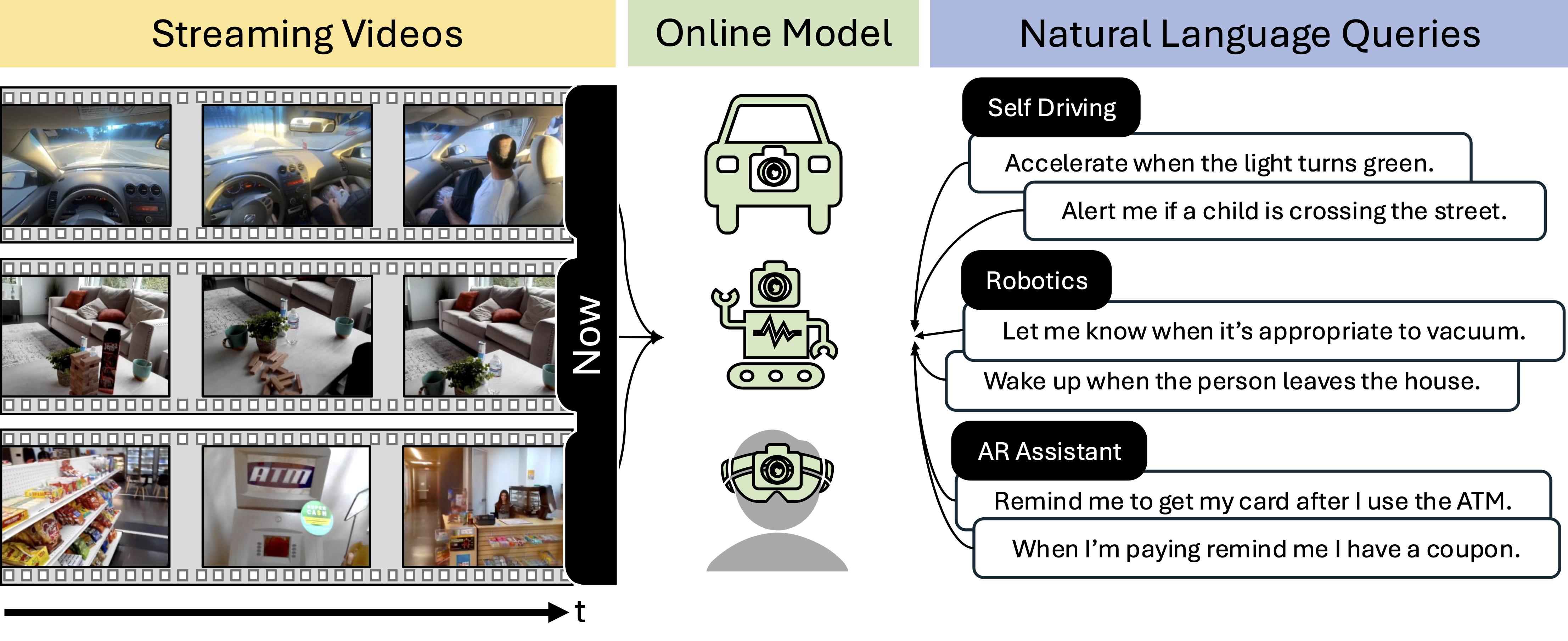

Robotics, autonomous driving, augmented reality, and many embodied computer vision applications must quickly react to user-defined events unfolding in real time. We address this setting by proposing a novel task for multimodal video understanding: Streaming Detection of Queried Event Start (SDQES). The goal of SDQES is to identify the beginning of a complex event as described by a natural language query, with high accuracy and low latency. We introduce a new benchmark based on the Ego4D dataset, as well as new task-specific metrics to study streaming multimodal detection of diverse events in an egocentric video setting. Inspired by parameter-efficient fine-tuning methods in NLP and for video tasks, we propose adapter-based baselines that enable image-to-video transfer learning, allowing for efficient online video modeling. We evaluate three vision-language backbones and three adapter architectures in both short-clip and untrimmed video settings.

Contents

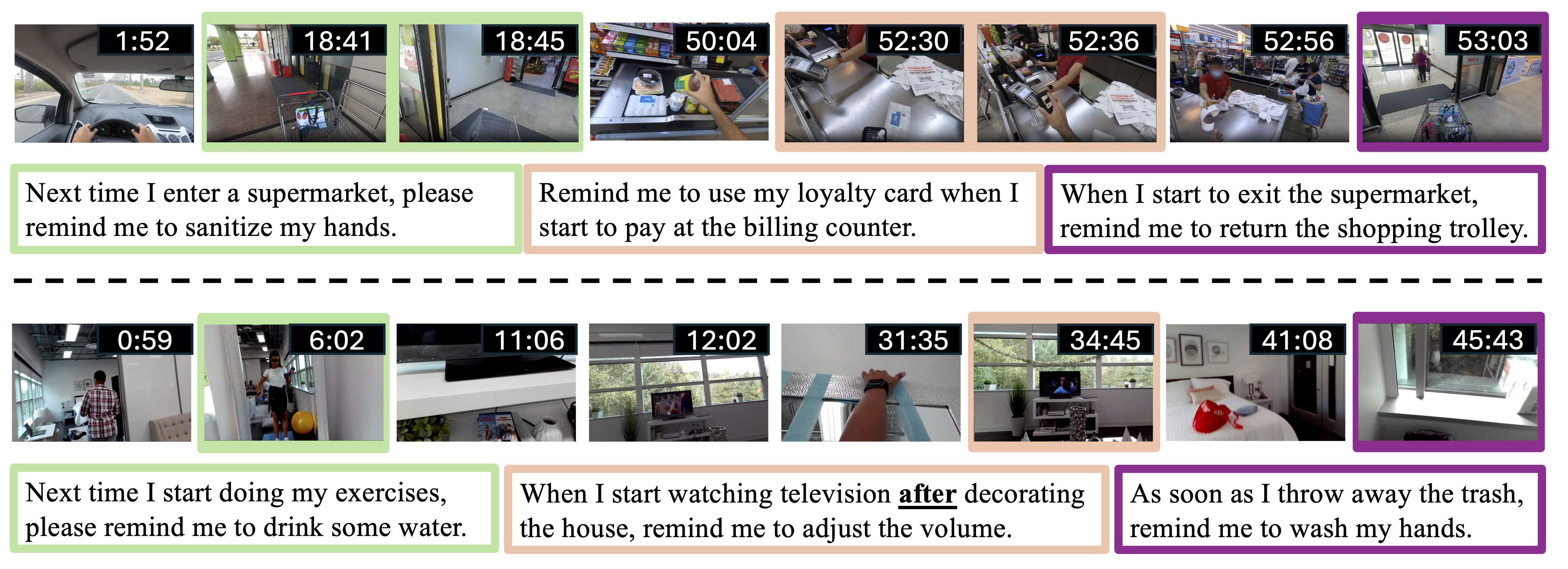

Each instance consists of raw video in .mp4 format coupled with temporally and contextually relevant natural language queries. Each query is accompanied by start and end timestamps in seconds encoded as floating point numbers and a response string. The code for generating the instances is available here.
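As a rough illustration of the instance layout described above, the following Python sketch models one annotated query as a small record. The class name, field names, and example values are all hypothetical and are not taken from the released annotation files; only the kinds of fields (an .mp4 video, a query, floating point start and end times in seconds, and a response string) come from the description.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueryInstance:
    """One annotated query. Field names are hypothetical, not the released schema."""

    video_path: str  # path to the raw .mp4 file
    query: str       # natural language description of the event
    start_s: float   # event start, in seconds
    end_s: float     # event end, in seconds
    response: str    # response string paired with the query

    def __post_init__(self) -> None:
        # Basic sanity checks on the fields described in the text.
        if not self.video_path.endswith(".mp4"):
            raise ValueError("expected an .mp4 video path")
        if not 0.0 <= self.start_s <= self.end_s:
            raise ValueError("expected 0 <= start_s <= end_s")


# Made-up example values, for illustration only.
example = QueryInstance(
    video_path="videos/example.mp4",
    query="When do I pick up the knife?",
    start_s=12.5,
    end_s=15.0,
    response="You pick up the knife.",
)
```

Validating the timestamps at construction time is a design choice of this sketch, so that malformed annotations fail early rather than during evaluation.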

EgoSDQES has a total of 12,767 query annotations spanning 1,773 distinct videos, divided into training and validation splits such that there is no overlap between training and validation instances. The split follows the official Ego4D splits to avoid data contamination when evaluating models trained on Ego4D. The query and response annotations (along with their respective timestamps) are available for inspection and download here.
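A quick way to sanity-check the no-overlap property is to compare the video identifiers in the two splits. The sketch below assumes each annotation is a `(video_id, query)` pair and that overlap is checked at the video level; both the representation and the helper name `overlapping_videos` are assumptions made for illustration, not part of the released code.

```python
from typing import Iterable, Set, Tuple

Annotation = Tuple[str, str]  # (video_id, query); a hypothetical representation


def overlapping_videos(
    train: Iterable[Annotation], val: Iterable[Annotation]
) -> Set[str]:
    """Return the video IDs that appear in both splits (empty set if disjoint)."""
    train_ids = {video_id for video_id, _ in train}
    val_ids = {video_id for video_id, _ in val}
    return train_ids & val_ids


# Made-up annotations, for illustration only.
train_split = [("video_a", "query 1"), ("video_a", "query 2"), ("video_b", "query 3")]
val_split = [("video_c", "query 4")]
leaky_split = [("video_b", "query 5")]

disjoint = overlapping_videos(train_split, val_split)
leaked = overlapping_videos(train_split, leaky_split)
```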

We take special care to disambiguate repeated instances of events in the queries. That is, if the event in the query has occurred before, we add additional context (for example, prior context) to the query to make it unique. See the second row in the figure below for an example.

BibTeX

@inproceedings{Eyzaguirre_NeurIPS_2024,
  author = {Eyzaguirre, Cristobal and Tang, Eric and Buch, Shyamal and Gaidon, Adrien and Wu, Jiajun and Niebles, Juan Carlos},
  title = {Streaming Detection of Queried Event Start},
  booktitle = {Advances in Neural Information Processing Systems (NeurIPS), Datasets and Benchmarks Track},
  address = {Vancouver, Canada},
  month = dec,
  year = {2024},
  preprint = {2412.03567}
}